In October, the company introduced a VRWorks SDK for application developers that brought physically realistic visuals, sound, touch interactions, and simulated environments into the mix for their VR projects. Nvidia admits that creating VR games is very difficult, especially when trying to meet the performance targets for each individual headset: everything has to run at 90fps (about 11ms per frame), otherwise the experience degrades noticeably.
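The 90fps / 11ms pairing is simply the refresh interval: at 90 Hz, each frame has 1000 / 90 ≈ 11.1 ms to finish. A minimal Python sketch follows. The function names and sample frame times are illustrative and do not come from any Nvidia tool. It computes that budget and flags frames that exceed it.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame at a given refresh rate, in ms."""
    return 1000.0 / refresh_hz


def frames_over_budget(frame_times_ms: list[float], refresh_hz: float = 90.0) -> list[int]:
    """Indices of frames whose render time exceeds the per-frame budget."""
    budget = frame_budget_ms(refresh_hz)
    return [i for i, t in enumerate(frame_times_ms) if t > budget]


print(f"{frame_budget_ms(90):.1f} ms")                      # 11.1 ms
print(frames_over_budget([9.8, 10.5, 12.3, 11.0, 14.7]))   # [2, 4]
```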

One way the company wants to help VR creators deliver consistent high-quality, high-framerate experiences is by giving them a software capture utility to analyze individual time slices of gameplay. This, it claims, will help them determine where framerate drops occur most frequently, and how to compensate using a variety of available shading techniques.

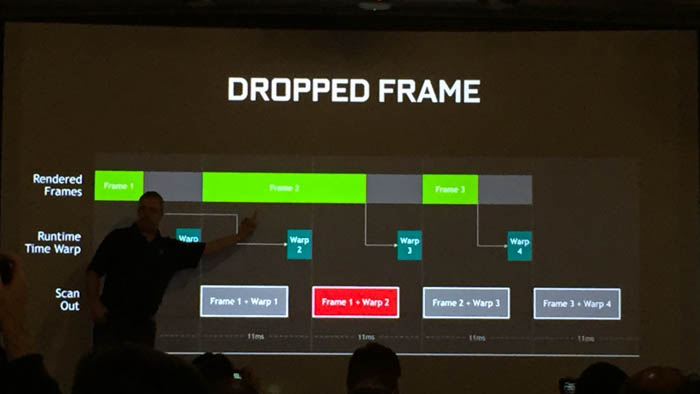

In the VR pipeline, there are a lot of “handshakes” between the game engine, the software runtime, and the headset. In an ideal VR pipeline, the game engine renders a frame, the frame gets warped or “transformed,” and the output is then displayed on the screen. If an explosion happens or a player turns a corner, rendering takes a little longer and the frame misses its time slice. To make up for the difference, the runtime reuses the previously rendered frame and reprojects it at a slightly different angle. When this happens, the animation becomes a bit choppy, though the end result is less stutter.
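The fallback described above can be modeled as a simple timeline. This is a deliberately simplified sketch, not how any particular runtime is implemented. It assumes the engine starts each frame on a vsync, and that a frame needing k refresh intervals is shown at the k-th vsync while the previous frame is reprojected in the intervals before it. The function name and labels are hypothetical.

```python
import math

REFRESH_MS = 1000.0 / 90  # one 90 Hz refresh interval, about 11.1 ms


def compose(render_times_ms: list[float]) -> list[str]:
    """Label each refresh interval as a new frame or a reprojection (toy model)."""
    timeline = []
    for frame, cost in enumerate(render_times_ms):
        # number of refresh intervals this frame occupies before it can be shown
        intervals = max(1, math.ceil(cost / REFRESH_MS))
        # until this frame is ready, the compositor re-shows the previous one
        filler = f"reproject {frame - 1}" if frame > 0 else "blank"
        timeline += [filler] * (intervals - 1)
        timeline.append(f"new {frame}")
    return timeline


print(compose([9.0, 10.0, 18.0, 8.0]))
# ['new 0', 'new 1', 'reproject 1', 'new 2', 'new 3']
```

Here the 18 ms frame spills into a second refresh interval, so frame 1 is reprojected once before frame 2 arrives.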

In November, Oculus improved on this with the introduction of “synthesized frames”: it takes motion vectors from prior frames and uses them to reproject the image at the sub-pixel level. The animation doesn’t freeze or stutter, but objects can still appear to bump into each other.
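Conceptually, a synthesized frame continues motion that has already been observed. This is a toy illustration of motion-vector extrapolation on a few object positions, assuming simple linear motion. Oculus’s actual technique operates on rendered image data and is not reproduced here, and the object names and coordinates are made up.

```python
def synthesize(prev: dict[str, tuple[float, float]],
               curr: dict[str, tuple[float, float]]) -> dict[str, tuple[float, float]]:
    """Extrapolate each object's screen position one frame ahead (toy model)."""
    out = {}
    for name, (x, y) in curr.items():
        px, py = prev.get(name, (x, y))   # no history: assume the object is stationary
        dx, dy = x - px, y - py           # motion vector in pixels, may be fractional
        out[name] = (x + dx, y + dy)
    return out


prev = {"ball": (100.0, 50.0), "wall": (300.0, 50.0)}
curr = {"ball": (102.5, 50.25), "wall": (300.0, 50.0)}
print(synthesize(prev, curr))
# {'ball': (105.0, 50.5), 'wall': (300.0, 50.0)}
```

Because it only continues past motion, a pure extrapolation like this has no notion of contact or occlusion, which hints at why synthesized frames can still show artifacts between moving objects.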

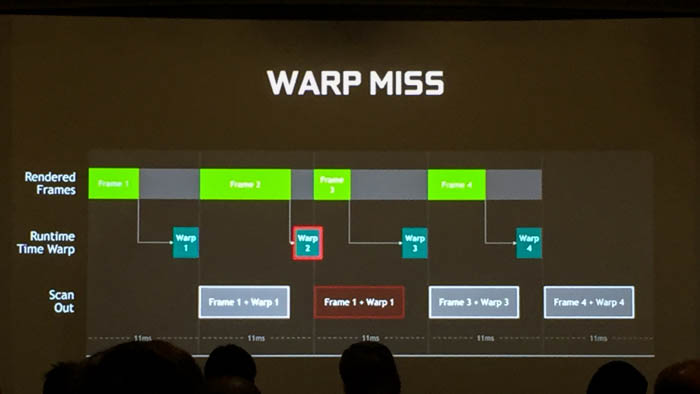

The third thing that can happen in the rendering pipeline is a “warp miss,” where the runtime fails to deliver any frame, new or reprojected, in time. In the graph below, the second timewarp “reprojection” takes place while the third frame is going through the pipeline, so it misses the second frame’s scan-out to the display.
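The three outcomes (a new frame, a reprojected frame, and a warp miss) can be expressed as a per-interval classification. In this hypothetical sketch, each refresh interval is described by two flags: whether the game delivered a new frame, and whether the runtime’s warp finished before scan-out. A late warp is treated as a warp miss regardless of the game frame, since nothing new reaches the display.

```python
from collections import Counter


def classify(app_frame_ready: bool, warp_on_time: bool) -> str:
    """Label one refresh interval (hypothetical model)."""
    if not warp_on_time:
        return "warp miss"     # nothing new reaches the display; the previous image repeats
    if app_frame_ready:
        return "new frame"
    return "reprojected"       # runtime re-warps the previous frame


# (app_frame_ready, warp_on_time) for five made-up refresh intervals
intervals = [(True, True), (False, True), (True, False), (True, True), (False, False)]
labels = [classify(*iv) for iv in intervals]
print(labels)
# ['new frame', 'reprojected', 'warp miss', 'new frame', 'warp miss']
print(Counter(labels)["warp miss"])  # 2
```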

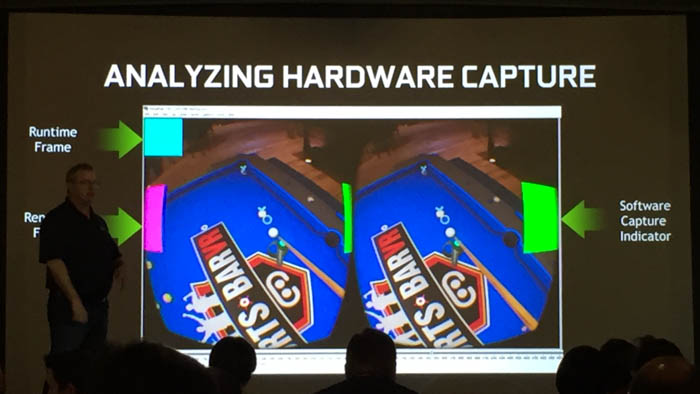

Traditionally, analyzing these kinds of rendering issues meant installing a rather expensive capture card, or gathering data with FRAPS, which measures what’s happening on the desktop monitor rather than in the VR headset. While these utilities focus on frame rate, they don’t measure stutter, hitching or latency, all of which have a noticeable impact on a VR experience.
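Why frame rate alone is not enough: two runs can share the same average FPS while one of them contains a long hitch. This sketch uses made-up frame times and a hypothetical `summarize` helper to compare an average-FPS figure with the worst frame time and the number of frames over the 90 Hz budget.

```python
def summarize(frame_times_ms: list[float], refresh_hz: float = 90.0) -> dict[str, float]:
    """Average FPS plus two stutter indicators for a list of frame times."""
    budget = 1000.0 / refresh_hz
    return {
        "avg_fps": round(1000.0 * len(frame_times_ms) / sum(frame_times_ms), 1),
        "worst_frame_ms": max(frame_times_ms),
        "over_budget": sum(t > budget for t in frame_times_ms),  # count of late frames
    }


smooth = [11.1] * 10
hitchy = [8.0] * 9 + [39.0]   # same total time, but one long hitch
print(summarize(smooth))  # {'avg_fps': 90.1, 'worst_frame_ms': 11.1, 'over_budget': 0}
print(summarize(hitchy))  # {'avg_fps': 90.1, 'worst_frame_ms': 39.0, 'over_budget': 1}
```

Both runs report the same average, yet only the second contains a 39 ms frame that a player would feel as a hitch.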

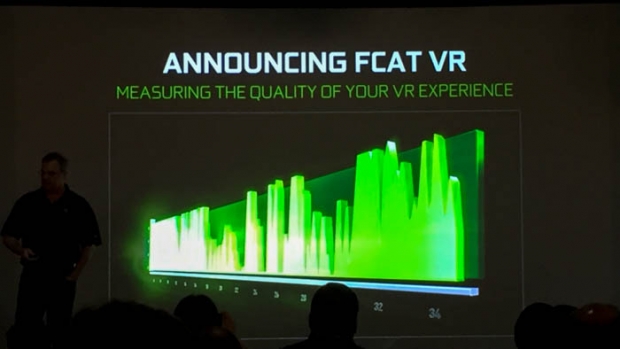

Introducing the FCAT VR frame capture utility

Nvidia decided that hardware capture is generally too much work for most people. It also realized that stutter and latency are critical to measure, as they can degrade the user experience to the point of motion sickness. So the company developed its own software called FCAT VR capture.

FCAT VR (Frame Capture Analysis Tool for VR) is based on FCAT, Nvidia’s original frame capture tool for benchmarking graphics performance, released in 2013. The utility provides, Nvidia claims, comprehensive measurement of frame time and stutter without the need for costly external capture hardware.

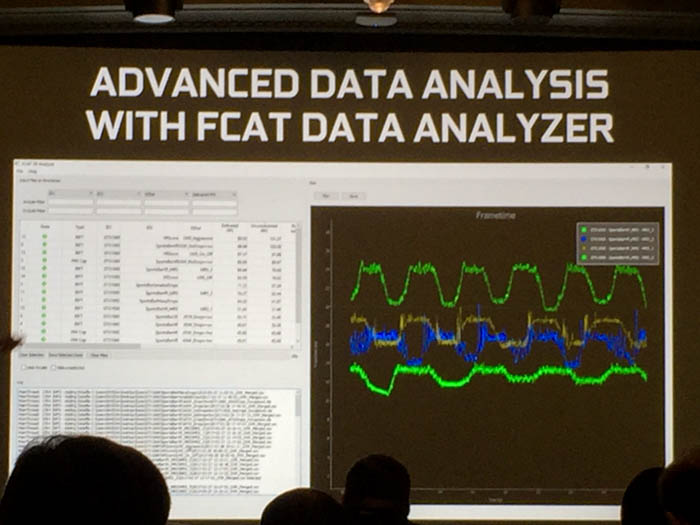

With this utility, the FCAT VR SW Capture program reads data from the Oculus and Vive performance APIs and outputs it as a .csv file. The software then breaks the data down into delivered FPS, unconstrained FPS, refresh intervals, new frames, and dropped frames. The resulting framerate figures can differ considerably from what conventional tools like FRAPS report.
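The metrics listed above can be derived from per-interval data. This sketch assumes a hypothetical CSV layout: one row per refresh interval, with a `new_frame` flag and a `gpu_ms` render time. FCAT VR’s real export format and its exact metric definitions may differ. Here delivered FPS counts new frames per second of capture, unconstrained FPS is estimated from average GPU time as if the refresh rate did not pace the game, and dropped frames are intervals with no new frame.

```python
import csv
import io

REFRESH_HZ = 90  # assumed headset refresh rate

# Hypothetical export: new_frame is 1 when a freshly rendered frame reached the
# display; gpu_ms is that frame's GPU time (empty when no new frame arrived).
SAMPLE_CSV = """interval,new_frame,gpu_ms
0,1,8.0
1,1,10.0
2,0,
3,1,16.0
4,1,8.0
5,1,8.0
"""


def analyze(text: str, refresh_hz: int = REFRESH_HZ) -> dict[str, float]:
    rows = list(csv.DictReader(io.StringIO(text)))
    refresh_intervals = len(rows)
    new_frames = sum(int(r["new_frame"]) for r in rows)
    gpu_times = [float(r["gpu_ms"]) for r in rows if r["gpu_ms"]]
    return {
        "refresh_intervals": refresh_intervals,
        "new_frames": new_frames,
        # intervals where no new frame arrived (reprojected or missed)
        "dropped_frames": refresh_intervals - new_frames,
        # new frames the user actually saw per second of capture
        "delivered_fps": new_frames * refresh_hz / refresh_intervals,
        # rate the GPU could sustain if it were not paced by the refresh rate
        "unconstrained_fps": 1000.0 / (sum(gpu_times) / len(gpu_times)),
    }


print(analyze(SAMPLE_CSV))
# {'refresh_intervals': 6, 'new_frames': 5, 'dropped_frames': 1, 'delivered_fps': 75.0, 'unconstrained_fps': 100.0}
```

With these sample rows the GPU’s average workload corresponds to 100 fps, yet only 75 new frames per second reach the headset, because the single 16 ms frame costs a full extra refresh interval.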

As a result, every time slice in VR gameplay can now be plotted in sequence. The idea is to make it easier for reviewers to interpret datasets, and ultimately to make VR much easier to benchmark.

“We all know what good VR ‘feels’ like, but, until now, quantifying that feel was a challenge,” says Nvidia’s blog announcement. “Our FCAT VR tool gives developers, gamers and press a tool they can use to better understand what they’re seeing. The result will be more immersive VR.”

The utility will be available for download on Nvidia’s website in mid-March.